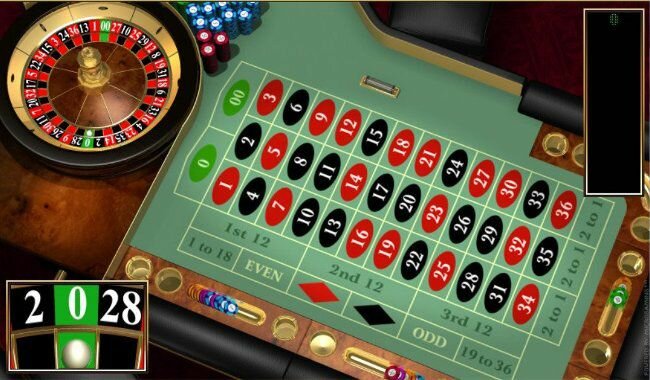

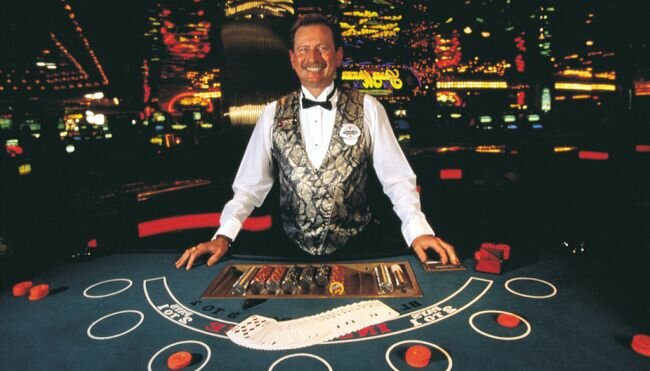

Popular Gambling Games: What You Need to Know Before Starting

Gambling games have always captured people's attention, drawing them in with both emotional thrills and financial stakes. From traditional casinos to online platforms, the gaming world keeps expanding and offers players a wide range of options. Before you start playing, however, it is important to understand the nature, rules, and risks of these games. Let's explore some of the […]